AI's impact on software engineering jobs

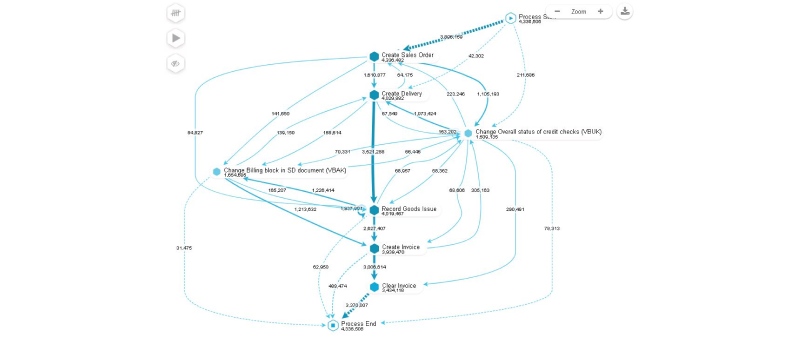

Anecdotes are great, but what does the data say? Here are some insights from an analysis of 20M job postings over 16 months.

Key Findings

- Overall, software engineering job postings have remained stable

- There is a significant shift in required skills

- AI/ML skills are increasingly in demand

Detailed Analysis

Job Market Trends

- AI and ML Engineers: Showing the strongest growth in demand, leading the market

- Front-end Engineers and Data Engineers: Experiencing a significant decline in demand

- Data Scientists: Demonstrating resilience with stable demand levels

Salary Insights

- Salary ranges remain relatively flat when adjusted for inflation

- Current market supply suggests limited potential for significant salary increases in the near term
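"Adjusted for inflation" means comparing salaries in constant dollars rather than nominal ones. A common way to do this, assuming a price index such as the CPI (the source does not state which index or base period the analysis used), is to rescale each nominal salary $S_{\text{nominal},t}$ observed at time $t$ by the ratio of the base-period index to the index at time $t$:

$$
S_{\text{real},t} = S_{\text{nominal},t} \times \frac{\text{CPI}_{\text{base}}}{\text{CPI}_{t}}
$$

As a hypothetical illustration: a salary that rises 3% in nominal terms over a year in which the index also rises 3% gives $1.03 \times \tfrac{1}{1.03} = 1$, so it is flat in real terms.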

Most In-Demand Skills

- NLP and LLM Technologies

  - Natural Language Processing has emerged as the most sought-after skill set

  - LLM-related skills, particularly chatbot development, are growing exponentially

- Programming Languages and Frameworks

  - Rust: Gaining significant momentum in the market

  - React: Taking substantial market share from Angular in front-end development

  - Python: Maintaining its position as the de facto language for ML development

Tech Company Hiring Patterns

- Large tech companies that previously conducted layoffs are now actively hiring again

- Recruitment is balanced across all roles, not limited to AI positions

- The evidence suggests a focus on upgrading talent and correcting the hiring practices of 2021

Key Takeaway

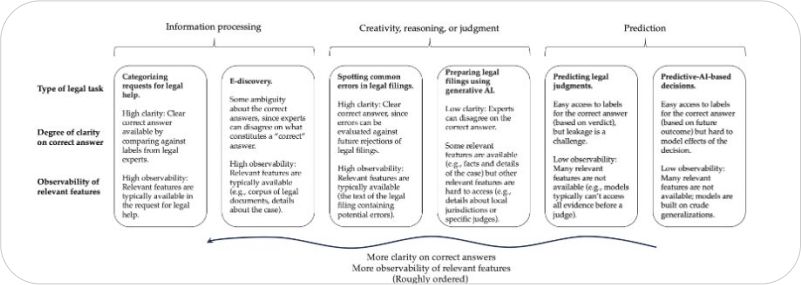

The data presents a clear message for both new graduates and experienced software engineers: incorporating AI skills into your toolkit is becoming increasingly important for career growth and marketability.

Source: Analysis based on 20M job postings over a 16-month period. Original data